机器学习——(基石)作业1

总共20道题目。

1. Which of the following problems are best suited for machine learning?

Classifying numbers into primes and non-primes

Detecting potential fraud in credit card charges

Determining the time it would take a falling object to hit the ground

Determining the optimal cycle for traffic lights in a busy intersection

Determining the age at which a particular medical test is recommended

这个题目比较简单,其中1和3很明显不是机器学习问题,我们清楚质数与非质数的规则,也知道物体下落公式,不需要机器去学习,其他正确,答案是2,4,5.

For Questions 2-5, identify the best type of learning that can be used to solve each task below.

2. Play chess better by practicing different strategies and receive outcome as feedback.

reinforcement learning———加强学习,因为需要不断加强,学习过程是连续的。

3. Categorize books into groups without pre-defined topics.

unsupervised learning————很明显是无监督学习。

4. Recognize whether there is a face in the picture by a thousand face pictures and ten thousand nonface pictures.

supervised learning————数据已经标好标签。

5. Selectively schedule experiments on mice to quickly evaluate the potential of cancer medicines.

active learning————实验的次数是有限的,可能无法做出海量次数的实验,因此需要根据少数实验结果(即部分样本有标签),这实际上是半监督学习的一种,当遇到机器无法决断的时候再去人工标签,也就是主动学习。

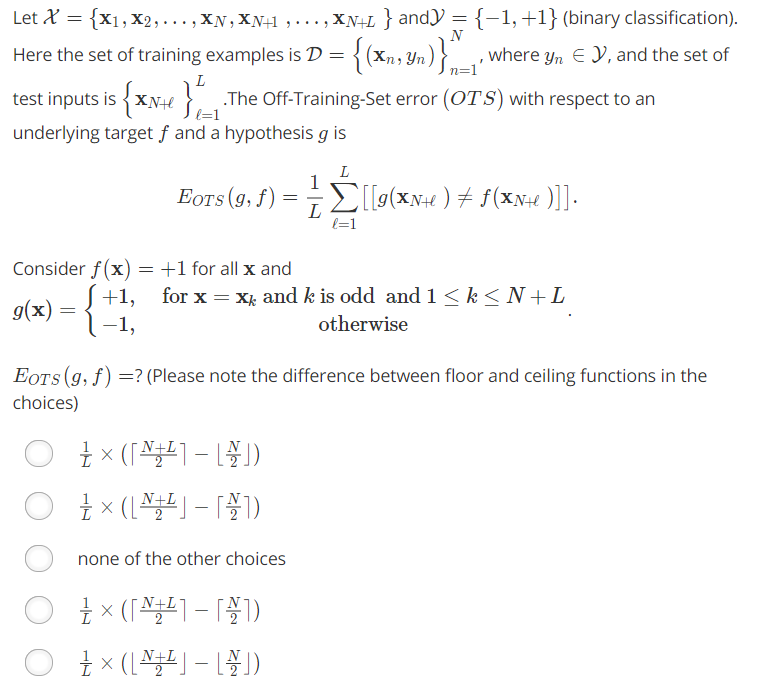

Question 6-8 are about Off-Training-Set error.

6.

这个题目中样本\(x_1,...x_n\)为训练集,而其余L个为测试集。正确的分类是所有的都是+1,而我们得到的\(g(x)\)是样本中下标为奇数的是+1,也就是大概会错一半。具体错多少? 总体样本错的也就是⌊\(\frac {N+L} 2\)⌋,而除去训练集中会错的⌊\(\frac {N} 2\)⌋答案是第五个。

7. We say that a target function \(f\) can "generate" \(\mathcal{D}\) in a noiseless setting if \(f(x_n)=y_n\) for all (\(x_n\), \(y_n\)) \(\in \mathcal{D}\). For all possible \(f \colon \mathcal{X} \rightarrow \mathcal{Y}\), how many of them can generate \(\mathcal{D}\) in a noiseless setting?

这个题目意思容易让人曲解,实际上问的是外面测试集大小为L,那么有多少种可能的f,f满足D中的样本,但是对于测试集中是无所谓的,因此可能的f有\(2^L\)个。

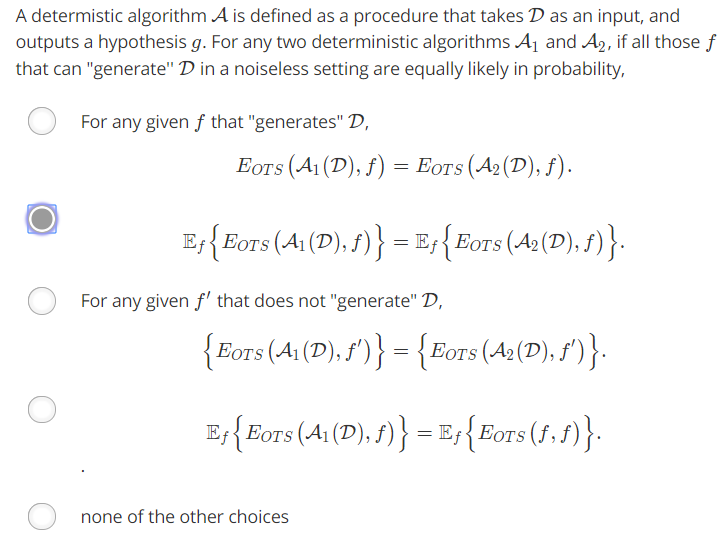

8. 这个题目主要在考察的是NFL定理。如果所有可以generateD的f是等概率的,也就是对于测试集中样本的+1,-1是完全随机的,那么所有学习算法得到的误差期望都是一致的。所以选2.

这个题目主要在考察的是NFL定理。如果所有可以generateD的f是等概率的,也就是对于测试集中样本的+1,-1是完全随机的,那么所有学习算法得到的误差期望都是一致的。所以选2.

For Questions 9-12, consider the bin model introduced in class.

9. Consider a bin with infinitely many marbles, and let μ be the fraction of orange marbles in the bin, and ν is the fraction of orange marbles in a sample of 10 marbles. If μ=0.5, what is the probability of ν=μ?

这是一道比较简单的概率题。u = 0.5,求选出10个出来有5个是橘色的概率。因为这个仓库箱子里弹珠的个数是无穷的,所以即使不放回,每次取出来依然近似于独立重复实验。则 \(p = C_{10}^5 {\frac 1 2}^{10} \approx 0.246\).

10. If μ=0.9, what is the probability of ν=μ?

与上面类似:\(p = C_{10}^9 {0.9}^9 \times 0.1 \approx 0.387\).

11. If μ=0.9, what is the actual probability of ν≤0.1?

这个问题就上面来说稍微复杂了一点,但是也不难。v≤0.1也就是10个中抽到了1个或者0个。 \(p = C_{10}^9 {0.9}^1 \times 0.1^9 + C_{10}^{10} {0.1}^{10} = 9.1 \times 10^{-9}\).

12. If μ=0.9, what is the bound given by Hoeffding's Inequality for the probability of ν≤0.1?

由题目可以知道\(\epsilon\) = 0.8,带入公式可以得到概率上界为\(2e^{-12.8} \approx 5.52 \times 10^{-6}\)

Questions 13-14 illustrate what happens with multiple bins using dice to indicate 6 bins. Please note that the dice is not meant to be thrown for random experiments in this problem. They are just used to bind the six faces together. The probability below only refers to drawing from the bag.

13. Consider four kinds of dice in a bag, with the same (super large) quantity for each kind.

A: all even numbers are colored orange, all odd numbers are colored green

B: all even numbers are colored green, all odd numbers are colored orange

C: all small (1~3) are colored orange, all large numbers (4~6) are colored green

D: all small (1~3) are colored green, all large numbers (4~6) are colored orange

If we pick 5 dice from the bag, what is the probability that we get 5 orange 1's?

简单翻译下题目:袋子里有4种骰子,第一种2,4,6面为橘色,第二种1,3,5面为橘色,第三种1,2,3面为橘色,第四种4,5,6面为橘色。4种筛子比例相同,骰子数目很多。第一道题目问到,拿5个骰子出来,5个骰子第一面都是橘色的概率?

1面是橘色,我们可以将上面4类分成2类了,其中第二与第三合并,每次取出来1面为橘色的概率是0.5,所以5个都是的概率是\(\frac 1 {32}\)。

14. If we pick 5 dice from the bag, what is the probability that we get "some number" that is purely orange?

我们拿出5个骰子,至少有一面全部都是橘色的概率。

这个就是稍微复杂的一个问题。首先观察骰子种类,我们发现,只要第一种与第二种碰面,或者第三种与第四种碰面,那么就不可能有一面全都是橘色。所以我们要求的就是上面两种情况不发生的概率。

如果抽出的5个骰子,占了4种骰子种的3种或者4种,那么上面的情况至少会有一种会发生。 而取5次取出3种的情况有2 2 1与 3 1 1两种可能。

首先,从4种里选3种出来\(C_4^3\),其次,考虑2 2 1的情况\(C_5^2C_3^2\),而2 2 1又会有3种分布,因此2 2 1的所有可能情况是\(3C_4^3C_5^2C_3^2 = 360\).

3 1 1与上述类似\(3C_4^3C_5^3C_2^1 = 240\).

然后考虑从4种取4种的情况,只会有一种分布:1 1 1 2,可以得到\(4C_5^2C_3^1C_2^1 = 240\).

最后我们就要考虑到从4种中取出来两种,而且恰好是第一种与第二种,或者第三种与第四种的概率。骰子有两种的次数分布有2种情况:1 4,2 3.

1 4: 2\(C_5^4 = 10\)

2 3: \(2C_5^3 = 20\)

考虑到第一种与第二种,第三种与第四种,因此结果还应乘2,最后结果是:60.

所以,所有不符合的情况共有240+240+60+360 = 900,因此这道题目最后结果是\(1 - \frac {900} {4^5} = \frac {31} {256}\).

For Questions 15-20, you will play with PLA and pocket algorithm.

15-20为编程题目,需要自己写代码验证,然后得到结果。

15. First, we use an artificial data set to study PLA. The data set is in data.Each line of the data set contains one (\(\mathbf{x}_n, y_n\)) with \(\mathbf{x}_n \in \mathbb{R}^4\). The first 4 numbers of the line contains the components of \(\mathbf{x}_n\) orderly, the last number is \(y_n\). Please initialize your algorithm with \(\mathbf{w} = 0\) and take \(sign(0)\) as -1. Please always remember to add \(x_0 = 1\) to each \(\mathbf{x}_n\).Implement a version of PLA by visiting examples in the naive cycle using the order of examples in the data set. Run the algorithm on the data set. What is the number of updates before the algorithm halts?

a.<10 updates

b.11 - 30 updates

c.31 - 50 updates

d.\(\geq\) 201 updates

e.51 - 200 updates

这道题目只需要应用上次实现的PLA算法,需要额外做的是数据的读取。在数据读取时候我运用了正则表达式来进行分割,整个过程整合在一个叫做readDataFrom函数中。

1

2

3

4

5

6

7

8

9

10

11

12def readDataFrom(filename):

result = []

separator = re.compile('\t|\b| |\n')

with open(filename,'r') as f:

line = f.readline()

while line:

temp = separator.split(line)[0:-1]

abc = [float(x) for x in temp]

result.append(abc)

line = f.readline()

return result1

修正次数: 61

16. Implement a version of PLA by visiting examples in fixed, pre-determined random cycles throughout the algorithm. Run the algorithm on the data set. Please repeat your experiment for 2000 times, each with a different random seed. What is the average number of updates before the algorithm halts?

a.<10 updates

b.11 - 30 updates

c.31 - 50 updates

d.\(\geq\) 201 updates

e.51 - 200 updates

这个版本需要对原来的pla算法进行简单的修改,每次寻找预测错误的样本时候采用随机的顺序去寻找。对于序列进行随机的办法实现方法很简单,也就是打乱序列,做法是与随机的坐标进行交换:

1

2

3

4

5

6

7

8

9def randomIndex(n):

index = [i for i in range(0,n)]

def swap(l,x,y):

l[x] = l[x]+l[y]

l[y] = l[x] - l[y]

l[x] = l[x] - l[y]

for i in range(0,n):

swap(index,i,int(random.random()*n))

return index1

修正次数: 61.3933

17. Implement a version of PLA by visiting examples in fixed, pre-determined random cycles throughout the algorithm, while changing the update rule to be \[ \mathbf{w}_{t+1} \leftarrow \mathbf{w}_t +\eta y_{n(t)}\mathbf{x}_{n(t)} \] with η=0.5. Note that your PLA in the previous Question corresponds to η=1. Please repeat your experiment for 2000 times, each with a different random seed. What is the average number of updates before the algorithm halts? a.<10 updates

b.11 - 30 updates

c.31 - 50 updates

d.\(\geq\) 201 updates

e.51 - 200 updates

只需要对上述算法进行简单改动即可.运行2000次后平均修正次数如下:

1

修正次数: 63.532

18. Next, we play with the pocket algorithm. Modify your PLA in Question 16 to visit examples purely randomly, and then add the "pocket" steps to the algorithm. We will use train as the training data set \(\mathcal{D}\), and test as the test set for "verifying'' the gg returned by your algorithm (see lecture 4 about verifying). The sets are of the same format as the previous one. Run the pocket algorithm with a total of 50 updates on \(\mathcal{D}\) , and verify the performance of \(\mathbf{w}_{POCKET}\) using the test set. Please repeat your experiment for 2000 times, each with a different random seed. What is the average error rate on the test set?

<0.2

0.2 - 0.4

0.4 - 0.6

\(\geq\) 0.8

0.6 - 0.8

这个题目需要实现pocket算法。pocket算法之前没有介绍,因为它和pla很像,只是pla算法的一个变形。因为我们无法保证数据一定是线性可分的,为此我们每次遇到错误更新后,与之前的w进行对比,如果错误率更低,再更新这个参数,同时pla算法选择错误的样本时候是随机顺序选择的,从之前的代码验证中我们也发现了随机对于减小修正次数来说是有好处的(但是生成随机序列同样也会带来额外开销)。

实现pocket算法只需要多加几行代码以及做少量改动,这里就不列出来了。值得注意的是需要添加一个新的函数,进行错误评估:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17def computeER(para,datas):

size = len(datas)

if size <= 1:

return;

dms = len(datas[0])

if dms == 0:

return;

count = 0

for i in range(0, size):

p = 0

for x in range(0, dms - 1):

p += para[x] * datas[i][x]

p += para[-1]

if p <= 0 and datas[i][-1] > 0 or p > 0 and datas[i][-1] < 0:#ignore

count=count+1

return count/size1

平均错误率: 0.12437000000000001

19. Modify your algorithm in Question 18 to return \(\mathbf{w}_{50}\) (the PLA vector after 5050 updates) instead of \(\hat{\mathbf{w} }\) (the pocket vector) after 50 updates.Run the modified algorithm on \(\mathcal{D}\), and verify the performance using the test set.Please repeat your experiment for 2000 times, each with a different random seed. What is the average error rate on the test set?

<0.2

0.2 - 0.4

0.4 - 0.6

\(\geq\) 0.8

0.6 - 0.8

这个算法返回pla向量。运行结果如下: 1

平均错误率: 0.3666599999999999

**20. Modify your algorithm in Question 18 to run for 100 updates instead of 50, and verify the performance of $mathbf{w}_{POCKET}$ using the test set. Please repeat your experiment for 2000 times, each with a different random seed. What is the average error rate on the test set?**

<0.2

0.2 - 0.4

0.4 - 0.6

\(\geq\) 0.8

0.6 - 0.8

很简单的改动,运行结果如下: 1

平均错误率: 0.10796000000000007

Note:

刚开始对pocket算法的理解有误,以为wt的更新是每次都对pocket中的w为基准的,实际上它就是正常的pla算法运行的过程,仔细思考这样确实比原来的算法更快。第二,pocket算法中随机选取错误意味着每次都要随机打乱样本,这样可以获得更低的错误率。

python不支持i++,++i这种操作,但是,为何不报错...

list直接赋值只是对源对象进行了引用,同时有浅拷贝与深拷贝之分。这个狠狠地坑了我一把。

15,16,17题目前运行依然是60多次.答案是31到50。------ 更新于8.8。 我忽略了cycle这个词,也就是找错误的过程应该从上次断掉的地方继续开始,更新后得到正确的结果。 受到影响的代码重新跑了一遍:

代码上传至github,修改了上次可视化的内容,不过只是修改了接口内容。1

2

315. 修正次数: 46

16. 修正次数: 40.867

17. 修正次数: 41.5105

代码几乎没怎么写注释,这是需要改进的地方。